Thursday, April 18, 2024 From rOpenSci (https://ropensci.org/blog/2024/04/18/champions-program-2024/). Except where otherwise noted, content on this site is licensed under the CC-BY license.

The goal of the rOpenSci Champions Program is to enable more members of historically excluded groups to participate in, benefit from, and become leaders in the R, research software engineering, and open source and open science communities. This program includes 1-on-1 mentoring for the Champions as they complete a project and perform outreach activities in their local communities.

Every year, rOpenSci opens a call for applications for two roles, Champions and Mentors. In this blog post, we describe the rigorous process we follow to select the Champions.

Review process

The Champions selection process is designed to promote equity and diversity among participants. It involves five steps:

1. Initial Application Review: rOpenSci staff first examine all submissions to verify the eligibility and technical details of each application. This ensures that every candidate meets the basic criteria for consideration.

2. Community Review: Two members of the rOpenSci community, including the current mentors of the Champions Program, assess each application in detail. Reviewers use a rubric so that their reviews are objective, thorough, and based on commonly established criteria[1].

3. Consistency Analysis: A quantitative analysis examines the level of agreement between reviewers. This step checks that the reviews are aligned, promoting fairness and minimizing bias in the selection process.

4. Diversity Review: The list of highest-scoring candidates is then carefully reviewed to ensure that it reflects a broad spectrum of backgrounds and regions. This may involve adding promising candidates to the pool to achieve a truly representative group of potential Champions.

5. Final Selection by Mentors: Finally, mentors review the top candidates and select their mentees.

This process is central to our goal of selecting a diverse group of Champions who are deeply committed to our community values.

We believe that involving mentors in the selection process significantly strengthens the mentor-mentee relationship. Because mentors take part in the selection, pairings are more effective and better matched. Their involvement is also crucial for identifying the projects that resonate most with them, which leads to a more informed and meaningful final match.

Selection criteria: Cultivating a diverse community

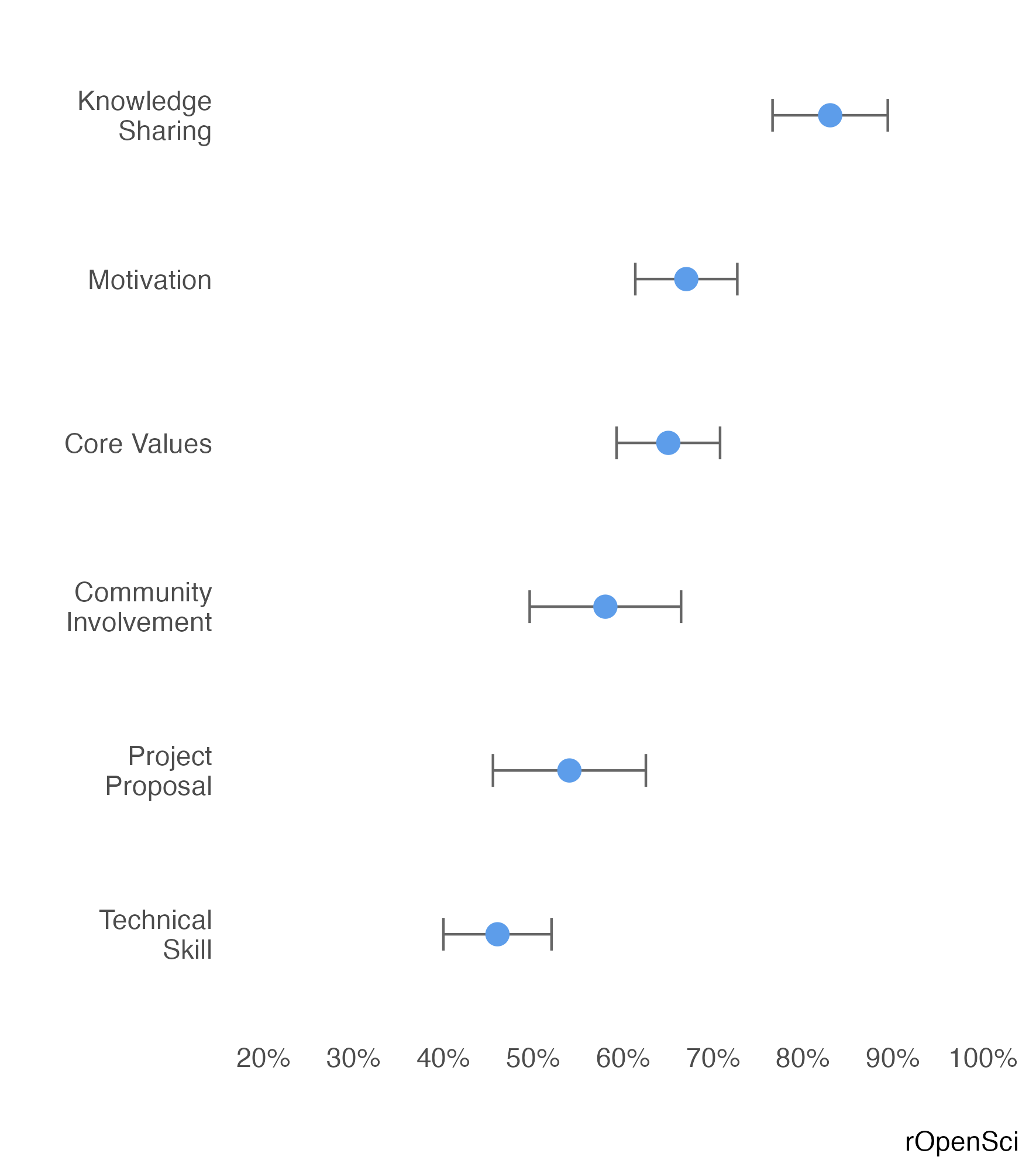

The second step of the review process is the core of the Champions selection. Each application is reviewed and scored in the following categories:

- Core Values: A strong adherence to values such as respect, inclusiveness, and collaboration.
- Community Involvement: Active participation in communities of practice, understood as groups of people who exchange knowledge. These can be communities within institutions and organizations (such as institutes, foundations, or universities), non-profit organizations (such as R-Ladies or The Carpentries), or companies. We look for communities related to STEM, Open Science, Research Software Engineering, and the R community.
- Project Proposal: Clarity, feasibility, and innovation of the project proposal.
- Knowledge Sharing: A concrete plan to disseminate acquired skills and knowledge within and beyond rOpenSci.
- Technical Skill: Proficiency in the technical areas the project requires. The program is aimed at neither beginner nor expert R developers.
- Motivation: Enthusiasm for joining and contributing to the rOpenSci community, and willingness to dedicate adequate time to the program given existing professional obligations.
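To make the rubric more concrete, here is a minimal Python sketch of how two reviewers' scorecards for one application might be validated and combined. The 1-5 scale, the per-category averaging, and the helper names `validate` and `combine` are our assumptions for illustration. The post does not specify how the two scores are aggregated.

```python
# Rubric categories as listed above. The 1-5 scale and simple
# averaging below are illustrative assumptions, not the program's rubric.
CATEGORIES = (
    "Core Values",
    "Community Involvement",
    "Project Proposal",
    "Knowledge Sharing",
    "Technical Skill",
    "Motivation",
)
SCALE = range(1, 6)  # assumed allowed scores: 1, 2, 3, 4, 5


def validate(scorecard: dict[str, int]) -> None:
    """Raise ValueError unless one reviewer scored every category on the scale."""
    missing = set(CATEGORIES) - set(scorecard)
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    for category, score in scorecard.items():
        if score not in SCALE:
            raise ValueError(f"{category}: score {score} is off the scale")


def combine(first: dict[str, int], second: dict[str, int]) -> dict[str, float]:
    """Average two reviewers' scores per category (hypothetical aggregation)."""
    validate(first)
    validate(second)
    return {c: (first[c] + second[c]) / 2 for c in CATEGORIES}


# Toy scorecards for a single application (made-up numbers).
reviewer_a = {c: 4 for c in CATEGORIES}
reviewer_b = {**reviewer_a, "Technical Skill": 2}
combined = combine(reviewer_a, reviewer_b)
print(combined["Technical Skill"])  # 3.0
```

Keeping the categories in one tuple makes it easy to check that no category was left unscored before the two reviews are compared.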

Because each application receives two independent scores, we run an agreement analysis on those scores to minimize the bias inherent to individual reviewers. This analysis calculates Cohen’s Kappa, a statistic that measures agreement between two raters beyond what would be expected by chance[2]. Based on this statistic, we may adjust the rubric’s scoring options to improve clarity and agreement. For instance, if a category consistently shows low agreement on a 1-5 scale, we might simplify it to a 1-3 scale. We repeat this process until agreement reaches a satisfactory level or we determine that the evaluations themselves need further review.
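The agreement check can be sketched in Python. The snippet below computes unweighted Cohen's Kappa from two reviewers' scores for the same applications, then shows the effect of collapsing a 1-5 scale to a 1-3 scale. The scores and the cut points used to collapse the scale (1-2, 3, 4-5) are made up for illustration. The post also does not say whether a weighted or unweighted Kappa was used. For real analyses, `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic and also supports linear or quadratic weights for ordinal scales.

```python
from collections import Counter


def cohen_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Unweighted Cohen's Kappa for two raters who scored the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items where both raters gave the same score.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: for each score, multiply the two raters' marginal
    # proportions, then sum over every score either rater used.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a.keys() | counts_b.keys()) / n**2
    if p_e == 1:
        # Both raters used one identical score for everything: Kappa is undefined.
        raise ValueError("Kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


def collapse_1_to_5(score: int) -> int:
    """Map a 1-5 score onto a 1-3 scale (hypothetical cut points: 1-2, 3, 4-5)."""
    return {1: 1, 2: 1, 3: 2, 4: 3, 5: 3}[score]


# Made-up scores from two reviewers for ten applications in one category.
scores_a = [5, 4, 4, 3, 2, 5, 3, 4, 1, 2]
scores_b = [4, 4, 5, 3, 1, 5, 2, 4, 2, 3]
print(round(cohen_kappa(scores_a, scores_b), 2))  # 0.23

collapsed_a = [collapse_1_to_5(s) for s in scores_a]
collapsed_b = [collapse_1_to_5(s) for s in scores_b]
print(round(cohen_kappa(collapsed_a, collapsed_b), 2))  # 0.68
```

In this toy example, agreement rises from about 0.23 to about 0.68 after collapsing the scale, because near-miss disagreements such as 4 versus 5 now count as agreement. A coarser scale also carries less information, so simplifying it is a trade-off rather than a free improvement.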

General results of the 2024 Champions applications

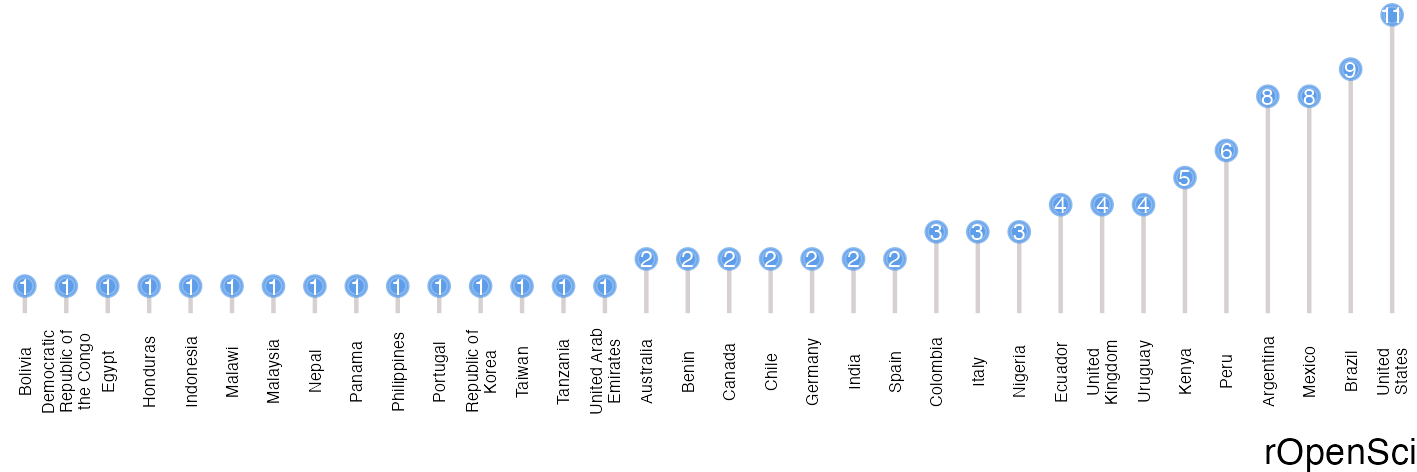

We received a total of 97 applications, of which 66 met the basic criteria of the call. These applications came from 34 different countries and represented a diverse range of backgrounds across academia, industry, and government.

General characteristics of the applicants

The largest number of applicants came from the United States, followed by Brazil, Mexico, and Argentina, which suggests a strong presence of the program in North and South America. However, we also received applications from countries in Africa, Asia, Europe, and Oceania, indicating that we are making progress toward our goal of a global reach for the Champions Program.

Of the applicants who reported pronouns, 56.7% used she/her and 43.3% used he/him. Overall, 38.1% of applicants did not report pronouns.

Applicants also reported their preferred languages, and nine different languages were mentioned. The most common was English (77 applicants), followed by Spanish (48) and Portuguese (14). These counts sum to more than the 97 applications, so applicants could evidently list more than one language. The other languages were French, Arabic, Catalan, German, Hindi, and Korean.

Scores

Most proposals received high marks in Knowledge Sharing (83%), Motivation (67%), and Alignment with Core Values (65%). These results highlight a distinctive characteristic of our program: the applicants’ commitment to sharing resources for the benefit of their communities and their eagerness to engage with our initiatives.

Our program does not require applicants to have advanced technical expertise or specialization in their fields, and this is reflected in the rubric scores: most proposals scored below 50% on Technical Skill.
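Summary percentages like these can be computed in more than one way, and the post does not say which method was used. One plausible reading, sketched below in Python, treats "high marks" as a combined score of at least 4 on a 1-5 scale and "below 50%" as a score under half of the scale maximum. Both thresholds, the helper names, and the toy scores are hypothetical and are not the program's data.

```python
# Hypothetical combined scores (1-5 scale) for five proposals; NOT program data.
SCORES = {
    "Knowledge Sharing": [5, 4, 4, 5, 3],
    "Technical Skill": [2, 3, 2, 1, 4],
}
SCALE_MAX = 5
HIGH_MARK = 4  # assumed threshold for "high marks"


def percent_where(values: list[int], predicate) -> float:
    """Percentage of proposals whose score satisfies `predicate`."""
    return 100 * sum(1 for v in values if predicate(v)) / len(values)


def summarize(values: list[int]) -> tuple[float, float]:
    """Return (% with high marks, % scoring below half of the maximum)."""
    high = percent_where(values, lambda v: v >= HIGH_MARK)
    below_half = percent_where(values, lambda v: v / SCALE_MAX < 0.5)
    return high, below_half


for category, values in SCORES.items():
    high, below_half = summarize(values)
    print(f"{category}: {high:.0f}% high marks, {below_half:.0f}% below 50% of max")
# Knowledge Sharing: 80% high marks, 0% below 50% of max
# Technical Skill: 20% high marks, 60% below 50% of max
```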

Key insights

In 2024, the Champions Program attracted a global cohort of applicants, underscoring its expanding influence and the increasing recognition of its value across different sectors and continents.

The rOpenSci Champions Program has made an intentional effort to foster inclusivity and diversity within the R community. Through a multi-step selection process, we strive for fairness and for representation across diverse demographics and geographic regions.

Using the rubric together with a thorough data analysis enables a balanced selection of participants, bridging the gap between experts and beginners. This approach is instrumental in identifying and cultivating the talent we aim to nurture in the program.

Transparency throughout the evaluation process is paramount in upholding the principles of fairness and equity. By openly communicating the criteria and methodology of our selection process, we aim to foster trust and accountability, reinforcing our commitment to inclusivity and diversity.

As we move forward, we understand that diversifying our community and increasing inclusivity is a process. Thus, the rOpenSci Champions Program remains committed to adapting and refining its processes to meet the evolving needs of its community and to address the challenges and opportunities in open science and research software development. We are inspired by the enthusiasm and caliber of the applications received and are excited about the potential of our new Champions to lead and innovate.

Acknowledgements

The inaugural cohort of the rOpenSci Champions Program was funded by the Chan Zuckerberg Initiative and led by Yani Bellini Saibene. It was co-designed with input from Camille Santistevan and Lou Woodley at the Center for Scientific Collaboration and Community Engagement (CSCCE), who contributed to the rubric discussed in this blog post. Francisco Cardozo led the analysis of the robustness of the application review process.

Further reading

- General Blog Posts related to the Champions Program

- Champions Program

- rOpenSci Champions Pilot Year: Projects Wrap-Up

- 2023 rOpensci Champions Program: My Experience

- CSCCE guidebook describing the role of community champions

[1] The rubric is designed to be flexible and adaptable to the specific needs of the program. We may adjust the scoring options based on the agreement analysis to improve clarity and agreement.

[2] Cohen’s Kappa measures how much two raters agree when categorizing the same items. Unlike simple percentage agreement, Kappa also accounts for the agreement expected if the raters were guessing at random. Initially, we found a Kappa of 0.48. After we adjusted the scoring options, it improved to 0.53, suggesting that the raters were more consistent than before. A Kappa of 1 indicates perfect agreement, 0 indicates no agreement beyond what chance alone would produce, and negative values indicate less agreement than chance. In our case, a Kappa of 0.53 shows that our raters agree to a satisfactory extent; on the widely cited Landis and Koch benchmarks, values between 0.41 and 0.60 are described as moderate agreement.
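For reference, the standard definition of Cohen's Kappa compares the observed agreement $p_o$ (the share of items on which both raters give the same score) with the agreement $p_e$ expected by chance, computed from each rater's marginal proportions $p_{A,k}$ and $p_{B,k}$ for every score $k$:

$$
\kappa = \frac{p_o - p_e}{1 - p_e}, \qquad p_e = \sum_k p_{A,k}\, p_{B,k}
$$

When $p_o = 1$, $\kappa = 1$. When observed agreement equals chance agreement ($p_o = p_e$), $\kappa = 0$, and $\kappa < 0$ when raters agree less often than chance would predict.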